Artificial intelligence

First mentioned in the 1950s, the phrase “Artificial Intelligence” describes the field of computer science that aims to create systems whose performance is indistinguishable from that of a human. Areas of application include:

- Learning from data (machine learning)

- Understanding language (natural language processing, NLP)

- Recognizing / generating images or objects (computer vision)

- Making decisions (planning, optimization)

Early systems relied on explicitly modeling knowledge and using logic to reason over it. Newer systems apply techniques adapted from neuroscience, enabling them to learn and transform knowledge from data and removing the need to model that knowledge by hand.
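The contrast between the two approaches can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not a neural network: the spam rule, the function names, and the example messages are all made up for this sketch. The first function encodes knowledge by hand; the second derives its "knowledge" from labeled examples.

```python
# Approach 1: knowledge is modeled explicitly as a hand-written rule.
def rule_based_is_spam(message: str) -> bool:
    # Hypothetical rule chosen by a human expert.
    return "free money" in message.lower()


# Approach 2: the system derives its own "knowledge" from labeled examples.
def learn_spam_words(examples):
    spam_words = set()
    ham_words = set()
    for text, is_spam in examples:
        words = set(text.lower().split())
        if is_spam:
            spam_words |= words
        else:
            ham_words |= words
    # Keep only the words that appear exclusively in spam examples.
    return spam_words - ham_words


def learned_is_spam(message: str, spam_words) -> bool:
    return any(word in spam_words for word in message.lower().split())


# Made-up training data, for illustration only.
training_examples = [
    ("win a prize now", True),
    ("claim your prize today", True),
    ("meeting moved to today", False),
    ("lunch now or later", False),
]

spam_words = learn_spam_words(training_examples)
print(rule_based_is_spam("Claim your prize"))           # False: the fixed rule misses it
print(learned_is_spam("Claim your prize", spam_words))  # True: learned from the examples
```

The hand-written rule only knows what its author thought of in advance, while the learned version adapts to whatever examples it is given, for better or worse.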

Note that generative AI, with its ability to create and its flagship tools like ChatGPT (NLP) or Midjourney (images), is only the latest subtype in a long line of innovations in this field.

Gen AI

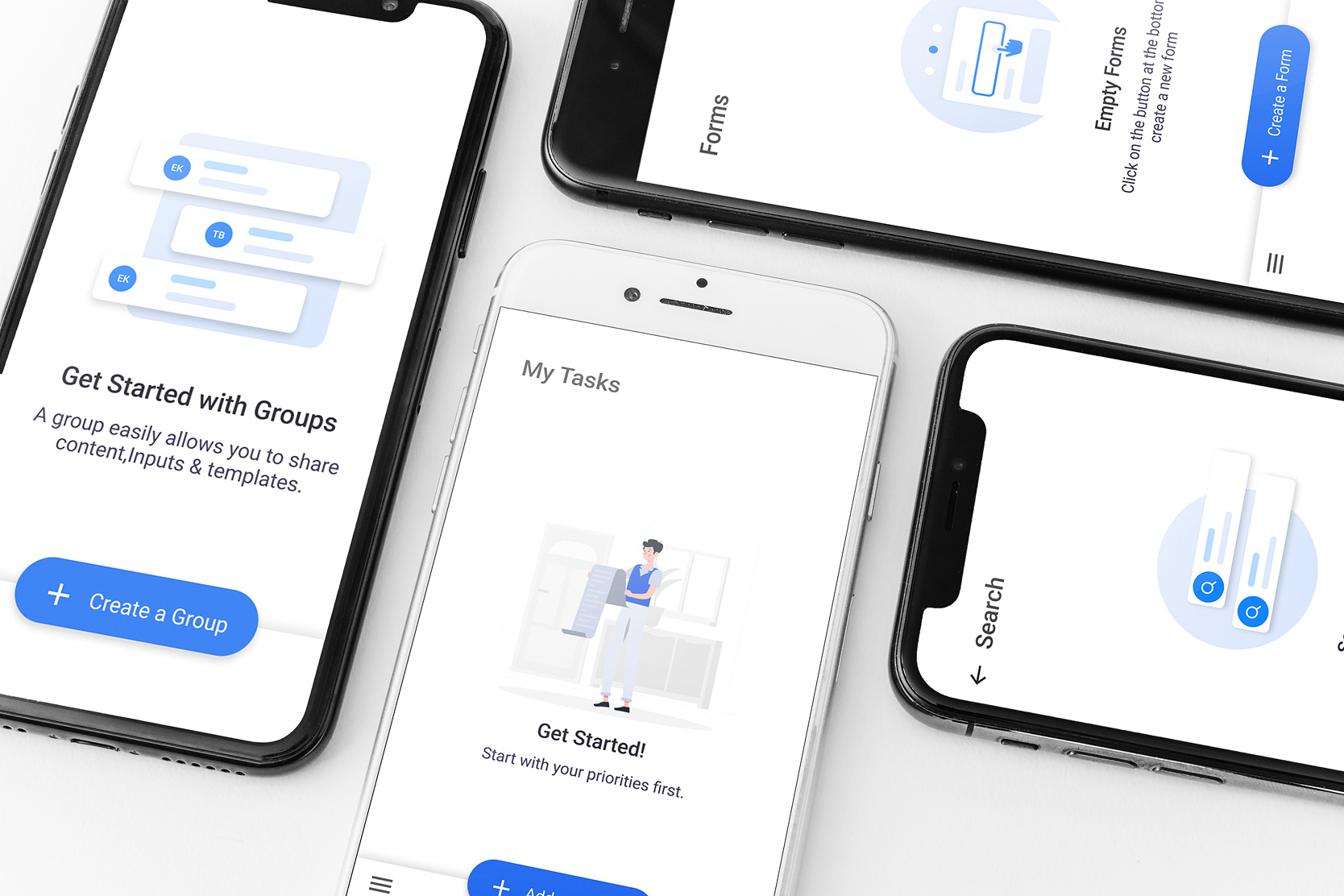

Gen AI, or generative AI, is a type of artificial intelligence. Compared to other types of AI, it has the ability to create: emails, software code, designs, pictures, music, and videos, among many other things. The type of output depends on the training the gen AI deep learning model receives. Gen AI does not refer to a specific piece of software; rather, it describes software that can understand input and produce content in a fashion similar to humans.

Foundational models

Foundational models are the basis for generative AI. They are machine learning models trained on massive amounts of data, from which they build expansive networks of relationships between words and attributes. Training a foundational model is very time- and cost-intensive, and only a few exist in the world. Luckily, one foundational model can be used as the basis for many different AI applications. For example, a foundational model turns into a large language model or a text-to-image generator once it is fine-tuned with application-specific data.


LLM

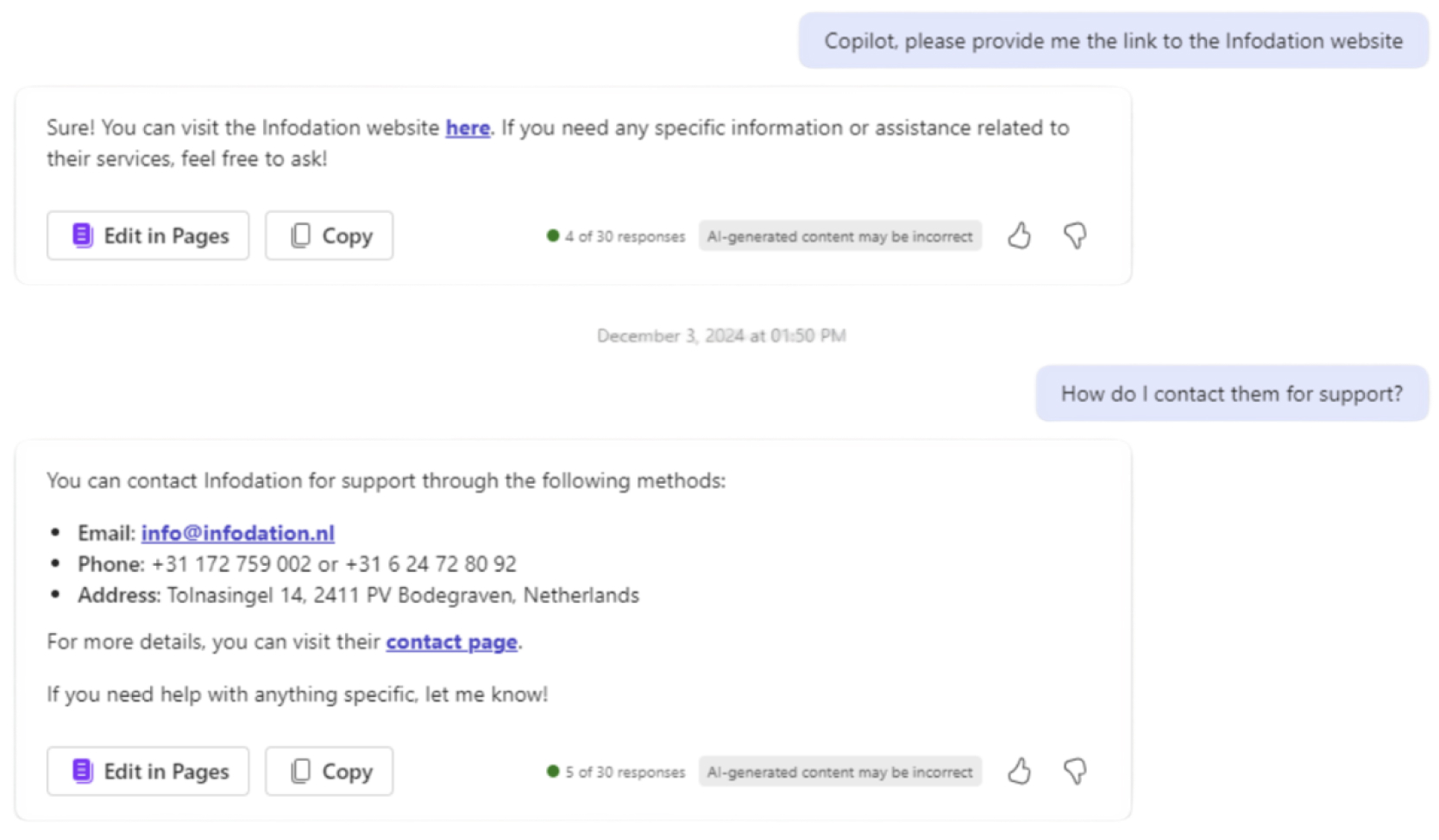

LLMs, or large language models, are foundational models that have been fine-tuned for language-related tasks such as writing, translating, or summarizing. They are trained to predict the statistically most likely next word given the text generated so far. They are the technology behind ChatGPT and Copilot.
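The idea of picking the statistically most likely next word can be illustrated with a very small sketch. It assumes a toy "bigram" model that only counts which word follows which in a made-up corpus; real LLMs use neural networks and far more context, so this is a simplification and not how ChatGPT or Copilot are implemented. The function names and the corpus are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Optional


def train_bigram_model(text: str):
    """Count, for every word, which words follow it and how often."""
    words = text.lower().split()
    model = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        model[current_word][next_word] += 1
    return model


def most_likely_next_word(model, word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Made-up training text, for illustration only.
corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
model = train_bigram_model(corpus)

print(most_likely_next_word(model, "the"))  # cat ("cat" follows "the" twice)
print(most_likely_next_word(model, "sat"))  # on
```

Generating a whole sentence amounts to repeating this step: predict a word, append it, and predict again from the new ending.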

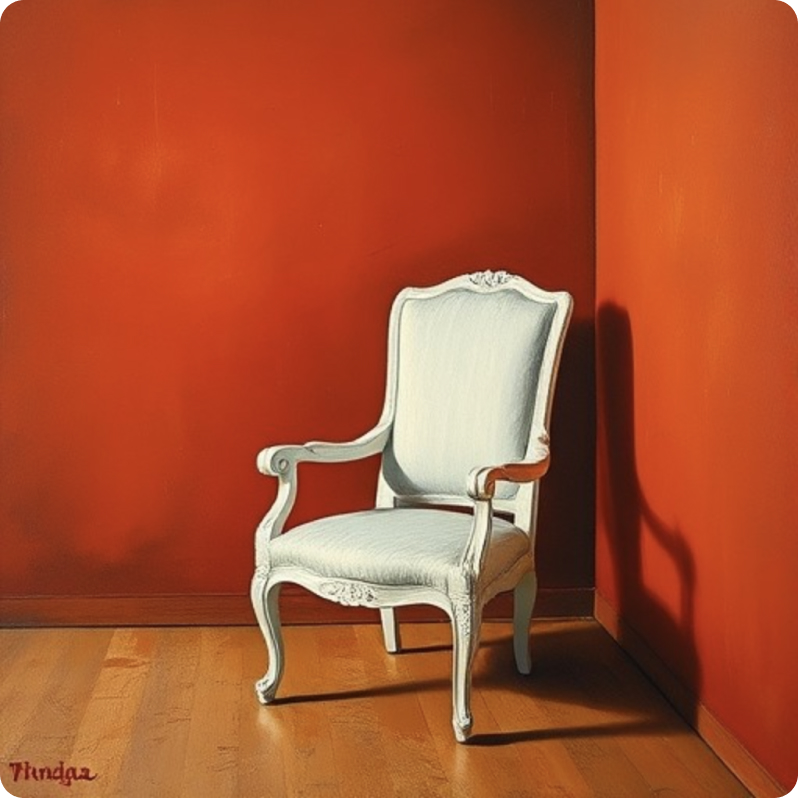

Text-to-image generators

Text-to-image generators such as DALL-E or Midjourney can create images based on text prompts. They are a fascinating combination of multiple AI technologies, some of which were developed in the early 2010s. Depending on your choice of image generator, the resulting picture can vary greatly, as the chosen foundational model and the images used to fine-tune it heavily impact the final result.

The following two images were created from the same prompt: “please generate an image: a white chair in a red room, soft lighting, van gogh, postimpressionism”

Source: Copilot

Source: www.deepai.org

Fine tuning

Once the foundational model has finished training, it can be fed additional (smaller amounts of) data to make it suitable for more context-specific applications. This process is called fine-tuning. Here are three examples of fine-tuning:

- Copilot has been fine-tuned to be able to detect emotion in a piece of text

- ProFound AI has been fine-tuned to detect breast cancer in mammograms

- OpenArt DnD has been fine-tuned to create epic-looking DnD character portraits

Fine-tuning AI models requires both training and testing data, and the quality of this data directly affects the results. Because AI models handle complex problems and data only ever represents a part of reality, achieving a 100% success rate is impossible. The person in charge of fine-tuning must balance achieving good model performance with the time, money, and effort spent on the process.
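The role of separate training and testing data can be sketched as follows. This is not real fine-tuning of a foundational model: as a stand-in, it assumes a toy word-count classifier for positive and negative sentences, and all names and example sentences are made up. What it shows is the principle of measuring performance on data the model has not seen.

```python
from collections import Counter


def train(examples):
    """Count how often each word appears under each label."""
    counts = {"positive": Counter(), "negative": Counter()}
    for text, label in examples:
        counts[label].update(text.lower().split())
    return counts


def predict(counts, text):
    """Pick the label whose training words overlap most with the text."""
    words = text.lower().split()
    positive_score = sum(counts["positive"][w] for w in words)
    negative_score = sum(counts["negative"][w] for w in words)
    return "positive" if positive_score >= negative_score else "negative"


def accuracy(counts, examples):
    """Share of examples for which the prediction matches the label."""
    correct = sum(predict(counts, text) == label for text, label in examples)
    return correct / len(examples)


# Made-up data, for illustration only.
training_data = [
    ("i love this product", "positive"),
    ("what a great day", "positive"),
    ("i hate waiting", "negative"),
    ("this is a terrible idea", "negative"),
]
testing_data = [
    ("i love a great idea", "positive"),
    ("terrible waiting times", "negative"),
    ("not great at all", "negative"),  # negation never appears in the training data
]

counts = train(training_data)
print(accuracy(counts, training_data))            # 1.0 on data the model has seen
print(round(accuracy(counts, testing_data), 2))   # 0.67 on unseen data
```

The model is perfect on its own training data but misjudges "not great at all", because the training data never covered negation. More or better data would help, which is exactly the trade-off between performance and effort described above.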

Prompting

Prompting refers to the act of providing cues or stimuli to evoke a response or action. In the context of AI, prompting involves giving specific instructions or input to an AI model to generate a desired output, for example “Provide me the link to the Infodation website”. This can include asking questions, providing scenarios, or giving commands to guide the AI’s responses.
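As a minimal sketch, a prompt can be thought of as plain text assembled from these ingredients. The helper below is hypothetical (the function name, the labels "Scenario", "Instruction", and "Question", and the scenario text are assumptions made for illustration), and it deliberately stops before sending anything to an AI model.

```python
def build_prompt(command: str, scenario: str = "", question: str = "") -> str:
    """Combine an optional scenario, a command, and an optional question into one prompt."""
    parts = []
    if scenario:
        parts.append(f"Scenario: {scenario}")
    parts.append(f"Instruction: {command}")
    if question:
        parts.append(f"Question: {question}")
    return "\n".join(parts)


prompt = build_prompt(
    command="Provide me the link to the Infodation website",
    scenario="You are a helpful assistant for new employees.",
)
print(prompt)
# Scenario: You are a helpful assistant for new employees.
# Instruction: Provide me the link to the Infodation website
```

The resulting text is what would be typed or pasted into the input field of a tool such as ChatGPT or Copilot.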

Conclusion

While the barrier to working with generative AI is as low as creating an account at OpenAI and writing a sentence in a text field, understanding generative AI feels like a task that requires multiple PhDs and a standing appointment in your calendar to keep up with the newest developments. For most of us, this effort is impossible to justify.

Luckily, like so many IT advancements, generative AI is strongly foundational: new developments are rarely a revolution, and more likely an evolution based on a previously existing concept. Spending time to learn the basics now will give you the chance to understand the advantages and pitfalls of current and future developments, and will set you up for a more productive relationship with generative AI in the long term. It is a technology worth investing your time in.