Prompting is the most common way to trigger output and one of the strongest tools for bringing dependability into our work with AI. By letting the software know exactly what we want and expect, we considerably increase the chances of cheering at the AI's response. Let's get into some tips and tricks to rig the game in our favour.

What is prompting?

Prompting involves giving cues or signals to encourage a response or action. For example, ordering a cup of coffee at your local café is a prompt. Prompting within generative AI refers to giving a model specific input to receive the desired outcome. Since most gen AI programs understand natural language, we can prompt them the way we would normally prompt our surroundings: by using human language to communicate. Prompting ChatGPT is therefore as easy as typing your request into the chat window.

For simplicity's sake, let's distinguish between three different types of prompts:

- Direct prompts give clear and specific instructions on the expected action. At your local café, your direct prompt towards your barista would be “I want a black coffee”. It’s direct, it leaves no room for interpretation, and you have set clear expectations about the expected outcome. This kind of prompt is beneficial if you know what you want.

- Indirect prompts are hints or suggestions rather than outright demands. In interpersonal communication, they are considered much more polite than direct prompts. “Could I have a black coffee, please?” will leave a better impression than the rather rude direct prompt. With gen AI, an indirect prompt allows room for more creativity while still guiding the answer towards a goal. An example of an indirect prompt for gen AI is: “Can you summarize the most important points of this PDF?”

- Open-ended prompts encourage creative responses. “What do you think I should be drinking today?” will most likely not end in a plain cup of coffee. This type of prompt is great for brainstorming and idea generation.

None of these three prompt types is inherently more successful than the others. Their benefit lies in being matched to the right use cases.

How to write better prompts

Add Context

Language is ambiguous, and as humans, we use context to interpret incoming and shape outgoing communication. At the beginning of a conversation, LLMs do not have context of their own[1]; they are programs that respond with the statistically most likely next word for a given text. They can, however, take context into account in their response. We can therefore ask them to answer from a specific perspective, and they are usually quite good at taking on that position.

Here are two example prompts:

- “I have a white label energy company. Give me three marketing campaign ideas.”

- “You are an expert in B2B marketing with a special focus on white label energy companies in the European market. Give me three marketing campaign ideas.”

The request itself stays the same, but we added context about the position we expect ChatGPT to take. The outcome will be a more tailored, higher-quality response: in this example, an industry-specific solution campaign, a referral program and a sustainability partnership program. This technique is helpful when looking for complex answers.
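
If you send prompts from a script rather than the chat window, the same idea can be sketched in a few lines of Python. This is only a minimal sketch: the function name `with_role` is made up for illustration, and the code just builds the prompt text. Sending it to a model is left to whatever tool you use.

```python
def with_role(role: str, request: str) -> str:
    """Prefix a request with the perspective we want the model to take."""
    # Hypothetical helper: tidy the role text and put it in front of the request.
    role = role.strip().rstrip(".")
    return f"You are {role}. {request.strip()}"


request = "Give me three marketing campaign ideas."
role = (
    "an expert in B2B marketing with a special focus on "
    "white label energy companies in the European market"
)

prompt = with_role(role, request)
print(prompt)
```

Keeping the role and the request separate makes it easy to try the same request from several perspectives and compare the answers.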

Be conscious of keywords

Keywords are specific words or phrases that capture the essential topics or concepts of a document. Your keywords are the anchor points that gen AI will build its response around. Keywords can be verbs (e.g. brainstorm, develop, justify), task-specific cues (answer this question, write an email, format a table) or sentiment indicators (given the writer is grieving, write an out-of-office notification); they can also shape the structure of the answer (respond in bullet points, create a dialog between three people), and many more. Keywords are task- and domain-specific, so choose what your own knowledge (and Google and gen AI) suggests as keywords. Read this interesting article for more in-depth information.

Use negative prompts

Negative prompts let gen AI models know what to avoid when creating content. As the prompt writer, you can use them to set strict boundaries on the output. Negative prompts can help exclude characteristics, themes or specific elements. They can be cued by “Do not include”, “Avoid”, “Exclude” or, for some image generators, a simple minus sign.

Negative prompts should only ever be one part of a normal prompt, and never stand by themselves.

Here are some interesting examples of negative prompts:

- Avoid slang.

- Exclude technical jargon.

- When writing this holiday party speech, do not include references to religion.

- Rewrite this email and exclude personal opinions.
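
For anyone assembling prompts in code, here is one possible way to attach negative prompts to a normal prompt. Treat it as a sketch under assumptions: `add_negatives` and the "Constraints:" layout are illustrative choices, not a standard, and the function refuses to run without a base prompt because a negative prompt should not stand by itself.

```python
def add_negatives(base_prompt: str, avoid: list[str]) -> str:
    """Append "Do not include" constraints to an existing prompt."""
    base = base_prompt.strip()
    if not base:
        # A negative prompt should never stand by itself.
        raise ValueError("negative prompts need a base prompt")
    if not avoid:
        return base
    constraints = "\n".join(f"- Do not include {item}." for item in avoid)
    return f"{base}\n\nConstraints:\n{constraints}"


prompt = add_negatives(
    "Write a short speech for our holiday party.",
    ["references to religion", "slang", "technical jargon"],
)
print(prompt)
```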

Bug fixing

As you have most likely experienced when working on a complex task with gen AI, your first prompt will often not give you the outcome you are looking for. You are not alone in this, and there is no technique that guarantees a first-time-right result. Here are some tips on how to rework your existing prompt:

- Engage in a conversation which slowly builds context

- Make your tacit expectations explicit by asking for details and specifics

- Try out different variations of the same prompt (with the help of a thesaurus and gen AI itself)

- Delete part of your prompt. Sometimes, specific keywords pigeon-hole the response and the only way out is to remove the keyword.

- Tired of ChatGPT? Try out another LLM like Copilot or Gemini
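
Two of the tips above, trying variations and deleting keywords, can be done systematically if you work from a script. The following is a rough sketch: the `variations` helper, the swap words and the example prompt are all invented for illustration, and the replacement is plain text substitution, so check each candidate before using it.

```python
def variations(prompt: str, swaps: dict[str, list[str]], droppable: list[str]) -> list[str]:
    """Return reworded and shortened versions of a prompt to try one by one."""
    results = []
    # Swap a word for alternatives, e.g. taken from a thesaurus.
    for word, alternatives in swaps.items():
        if word in prompt:
            for alt in alternatives:
                results.append(prompt.replace(word, alt))
    # Remove keywords that might pigeon-hole the response.
    for keyword in droppable:
        if keyword in prompt:
            shortened = " ".join(prompt.replace(keyword, "").split())
            results.append(shortened)
    return results


base = "Brainstorm five innovative slogans for a white label energy company."
candidates = variations(
    base,
    swaps={"Brainstorm": ["Suggest", "Draft"]},
    droppable=["innovative"],
)
for candidate in candidates:
    print(candidate)
```

Running each candidate in a fresh chat keeps earlier answers from colouring the comparison.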

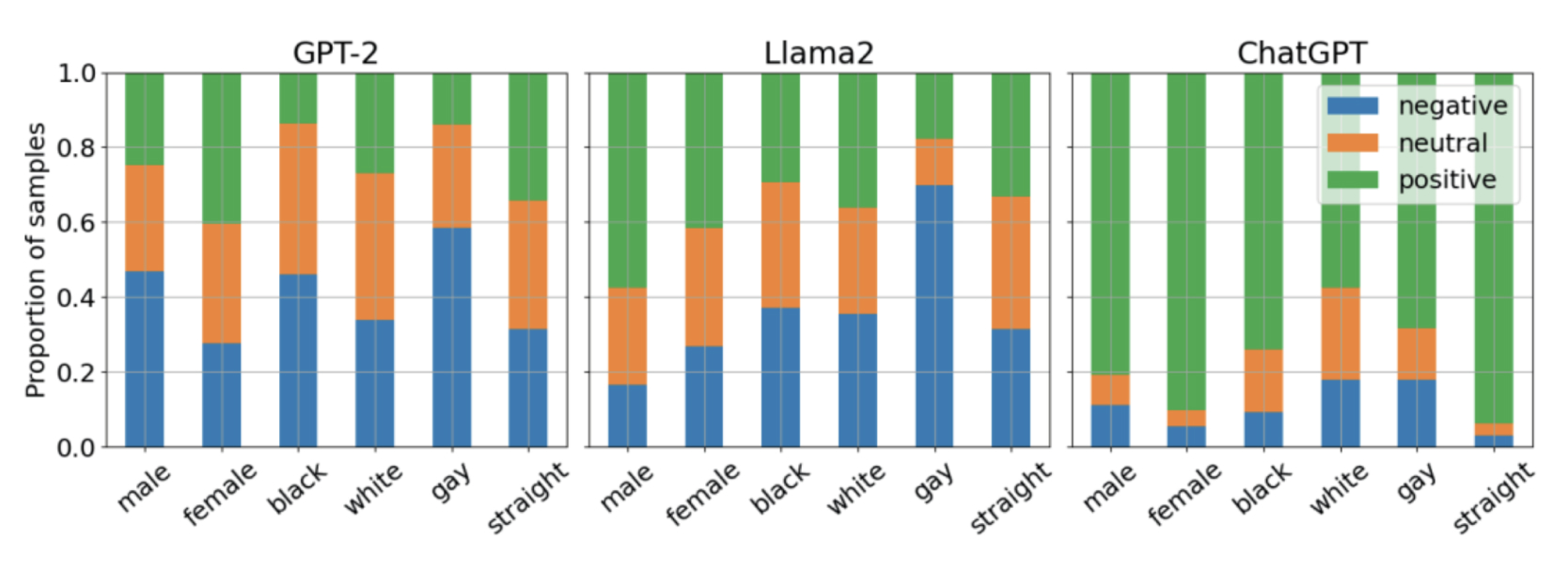

Be aware of bias

One of the major criticisms of generative AI is the assumed bias in the training material used to create foundation models. However, bias can also be hidden in the actual prompt.

Consider these two examples:

- “Write a LinkedIn post about a successful developer and his struggles working with AI”

- “Write a LinkedIn post about a successful developer and their struggles working with AI”

While a pronoun is a small change, it has an effect on the response from LLMs. The obvious difference is the name given to the protagonist (male John vs gender-neutral Alex when I ran both prompts). However, the content of the response also changes. A study published by UNESCO found that LLMs often reproduce traditional gender roles and are more likely to combine male names with words such as “business”, “executive” and “career”, and female names with “home”, “family” and “children”.

Feel free to take advantage of this bias: prompt with male names and pronouns, and then change the gender to your liking after retrieving the initial text.
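
To see the effect for yourself, it helps to generate the prompt variants from one template so that the pronoun really is the only difference. A small sketch, assuming you paste each prompt into the model of your choice afterwards; `pronoun_variants` is a made-up helper, and the "her" variant is added here for comparison and is not one of the two prompts above.

```python
TEMPLATE = (
    "Write a LinkedIn post about a successful developer "
    "and {pronoun} struggles working with AI"
)


def pronoun_variants(template: str, pronouns: list[str]) -> dict[str, str]:
    """Fill the same template once per pronoun."""
    return {p: template.format(pronoun=p) for p in pronouns}


variants = pronoun_variants(TEMPLATE, ["his", "her", "their"])
for pronoun, prompt in variants.items():
    print(f"[{pronoun}] {prompt}")
```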

Conclusion

Prompting feels like a gamble, but maybe that’s an unproductive way of seeing it. The better framing is to see each prompt as a conversation. The outcome is not yet known: you try, succeed and fail, and over the course of an interaction you get to a more comfortable place that allows for faster and better results. I personally try to regard ChatGPT as a helpful sparring partner instead of a minion that delivers information. However, just like in a conversation, end results can vary. Prompts can be perfect, but the black box that is a large language model offers no guarantee of good or even true results. But isn’t it the same for most human interactions?

[1] LLMs frequently come with a minimal built-in context in order to avoid abusive responses or sexual content.